CSAM, Adalytics and the Path Forward for Our Industry

In digital media, there are few absolutes. This is one of them.

The most recent Adalytics report once again revealed many gaps in the safety and security protocols that marketers rely on to ensure that their advertisements end up in the environments they intend, and stay out of environments where a single screenshot could irrevocably damage a company’s reputation or hurt its share price.

The most pernicious of all the ills that can befall a marketer from unmonitored programmatic media investments is to have their ads show up alongside CSAM and, by extension, have their advertising investments fund it. For those of you unfamiliar with the acronym, I asked PerplexityAI to craft an executive-level summary:

Child Sexual Abuse Material (CSAM) refers to any sexually explicit content involving a minor, such as photographs, videos, or computer-generated imagery. It documents the sexual abuse or exploitation of children and is illegal worldwide. The term “CSAM” is preferred over “child pornography” to emphasize the abusive nature of the material and avoid implications of consent, as children cannot legally consent to such acts. CSAM perpetuates harm by revictimizing children each time the material is shared and fuels further exploitation.

Morally, reputationally and from a regulatory perspective, CSAM is the absolute third rail of the digital media ecosystem, and we as an industry need a ZERO TOLERANCE standard: no ad (and all it takes is one) should ever run in environments that profit from the sexual abuse of minors.

The Adalytics study uncovered precisely that. Not in huge numbers, but at sufficient scale for any well-governed marketer to start asking tough questions to ensure they are not exposing their company to disproportionate reputational and financial risk, and their CEO to some very pointed questions from his or her Board of Directors.

I thought this was a no-brainer. Any media professional who knows what they’re doing would immediately, and quietly, start enquiring about their digital ad investments and any potential exposure. While many of those I’ve spoken to have done just that, I’ve also seen a flurry of “yeah, but” responses coming from corners of the digital ecosystem that seem to be bending over backwards to cite complexity, extenuating circumstances or scale as reasons marketers shouldn’t get too worked up about the Adalytics findings. They also go out of their way to cast doubt on the motives and methodology of Adalytics. (Hint: if you have any doubts, take a look at the screenshots in the Adalytics report at the link above, but don’t do it from your browser.) It’s almost as if they’re all reading from the same talking-points memo, but that’s just speculation on my part.

I’ve also been surprised by the silence from the trade associations at the center of our digital ecosystem, on both the buy side and the sell side. One trade association CEO has even gone so far as to make ad hominem attacks on me for advocating against CSAM and its traffickers!

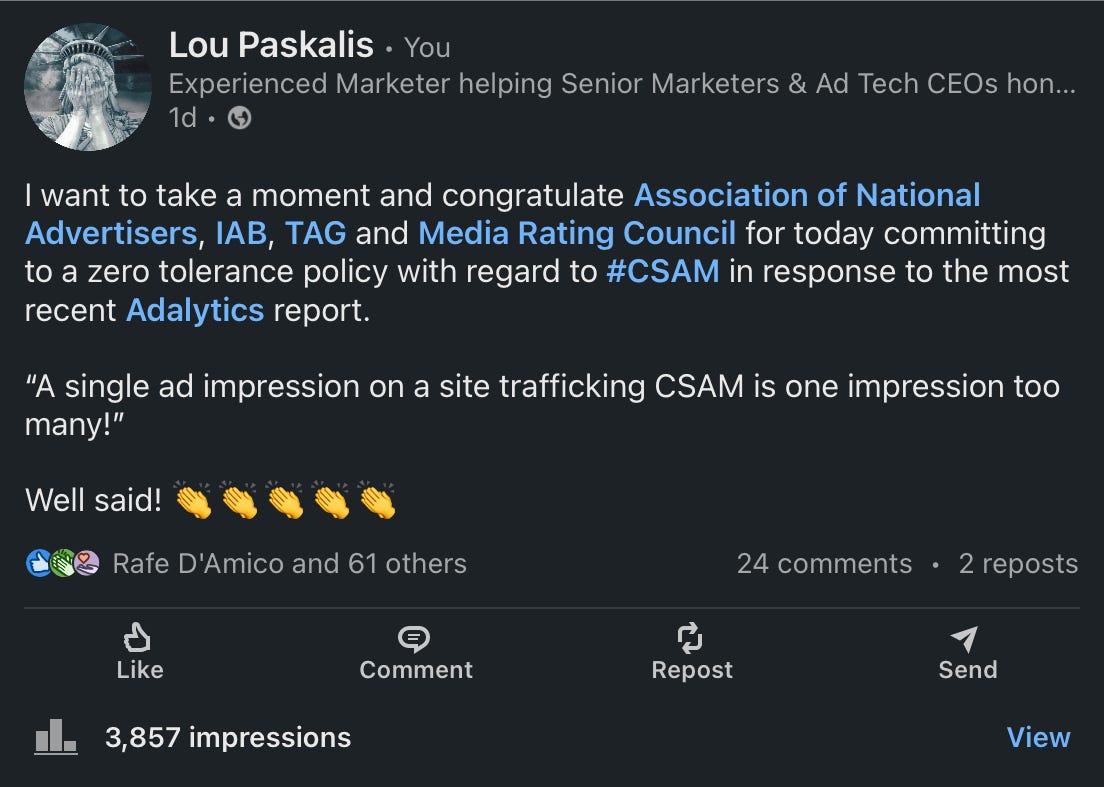

In response, yesterday, for the first time ever, I posted something on LinkedIn to drive home the point that now is not the time for trade associations to hide in the herd and hope it all blows over. Specifically, I congratulated the trade associations for something they have yet to do: come out and reaffirm that CSAM should be met with a ZERO tolerance policy from every quarter of our business (see screenshot below). Here’s a link to the post itself if you agree and want to give it a like.

This is not a nuanced issue. This is not a complex issue. This is not a minor issue (well, it is actually, but I am not going to make that pun here). This is an all-hands-on-deck issue that demands everyone raise their voices and assert that it is intolerable that marketers’ ads can end up on the very worst sites and that marketers’ ad dollars could be funding the sexual exploitation of children. I don’t care, and neither should you, whether it’s fewer than a thousand ad impressions or less than $5 in contribution: wrong is wrong, and this is as wrong as it gets. You really have to question the judgement of anyone who says otherwise.

This is what happens when you decouple audience from content and set that notion loose in a largely unregulated ecosystem dominated by tech oligopolies. To solve this, philosophically, we can’t continue to sever audiences from the publishers that aggregate and cultivate them. If we do, we’re going to keep the incentives in the wrong places, and you’ll continue to see more support for CSAM, terrorism, extremism and more.

I am deeply disturbed to read the list of ad tech vendors and major advertisers reportedly confirmed to have served ads on sites hosting CSAM. WTF??🧐🤨😡

This is absolutely unacceptable and raises serious questions about the integrity of the digital advertising ecosystem.

It’s no secret that these key players have always been, and continue to be, complicit in a race to the bottom on ad quality control, whether in the ads being served, the consumer experience, or the lack of transparency and accountability in areas like brand safety, ad fraud, and inventory quality. The root cause? A systemic laziness and an overreliance on inflated metrics and misleading analytics that prioritize appearances over real, meaningful outcomes.

This isn’t just negligence—it’s a failure of responsibility at every level. The industry must do better.

What worries me even more is the role AI will play in accelerating these problems. As AI-driven tools become more prevalent in ad targeting, content moderation, and performance measurement, the risks of misuse, lapses in oversight, and unintended consequences grow exponentially. Without robust ethical frameworks, transparency, and accountability, AI could amplify the very issues we’re already failing to address, making it easier to exploit loopholes, manipulate data, and further erode trust in the system.

Thanks for sharing this, Lou!